A popular podcast did a segment the other day about AI in music. The conversation centered around the role of technology in music, and the inevitable threat it presents to creatives. They seemed to think AI could one day replace musicians.

It got me thinking about the future of AI in the music industry. How will robots and humans evolve with musical creation, consumption and performance?

Busy? Try the speed read.

The scoop

Tech companies can use AI to compose new songs using existing datasets of music. This poses a serious threat to musicians and artists. Let’s talk about it.

About the tech

- AIVA Technologies, based in Luxembourg, created an AI that composes music for movies, commercials, games and TV shows.

- OpenAI’s Jukebox allows users to generate genre-specific music. You can look up an artist and select a genre. Theoretically, it would fuse that artist’s voice with an instrumental in the selected genre.

- Hologram tours are becoming increasingly popular. Eventually, using AI composition tools and hologram tech, deceased artists will be able to tour new music… and it will be hard to tell the difference from a standard pop concert.

- VOCALOID is a voice synthesizing software that allows users to create ‘virtual pop stars’. They are already widely popular in Asia.

- Other voice synthesizing tools allow users to imitate famous voices and spit out whatever output you’d like. Copyright law hasn’t caught up to this deepfake dystopian reality, so feel free to go make Jay-Z say whatever you want.

Humans > robots … for now

At least for the foreseeable future, AI is incapable of creating music without mimicking an existing data set that originated from human innovation. Similar to the way AIVA pitched their product, Artificial Intelligence can be used to help the artist speed up and maximize the composition process. It should be treated as a tool, not a replacement.

Zoom out

There will always be a place for bipedal fleshbags in the arts. With or without AI in music. Why? Because the consumers of creation are also fleshbags, and we want to be wowed and wooed by the hairy, smelly creatures that feel and squeal just like we do.

What does this have to do with sustainability? Supporting a pro-human future (in the face of tech) is a critical component of a sustainable future. We need to develop new technologies in a way that prioritizes happiness and harmony over production and profit.

Dig deeper → 9 min

Computers making music with AI

If you didn’t already know, computers know how to make music. In fact, computers knew how to sing 50 years ago.

Today, AI can write full compositions. As a musician, I’ll admit it is hard to tell the difference between your favorite composer and a well-funded software tool. And I’m not talking about an EDM instrumental track.

We’re talking about a brand new, original Beethoven symphony, or another Beatles album. These machines use historical data sets and neural networks to recognize patterns and produce novel compositions.

A new Beatles album?

If we decided to take thousands of hours’ worth of audio tapes from Lennon, Harrison, McCartney, and Starr, and blast them into a computer, the AI could shoot out its very own Beatles album like it was 1967 again. That includes lyrics and song titles, which are their own data set of words and replicable patterns, just like music notes.

Every song, every composition, no matter how innovative or genius it is, has a recognizable pattern. What does it all have in common? A rhythm, a melody, an accompaniment, vocals, instruments. In fact, most modern songs even have their own common structure:

Intro, verse, pre-chorus, chorus (or refrain), verse, pre-chorus, chorus, bridge (“middle eight”), verse, chorus and outro.

Pick your favorite pop or rock song off your shuffle playlist, and it is more than likely that your song follows that very structure.

Similarly, classical and jazz music have their own unique patterns and structures. A computer can process and form outputs for those genres too.
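To make the "recognize patterns, then produce something new" idea concrete, here is a minimal sketch. It is not how AIVA or Jukebox work: it swaps the neural network for a first-order Markov chain, a much simpler stand-in that only learns which note tends to follow which. The toy melodies and the function names `learn_transitions` and `generate` are made up for illustration.

```python
import random
from collections import defaultdict


def learn_transitions(melodies):
    """Record, for every note, the notes that followed it in the training data."""
    transitions = defaultdict(list)
    for melody in melodies:
        for current, following in zip(melody, melody[1:]):
            transitions[current].append(following)
    return transitions


def generate(transitions, start, length, seed=None):
    """Walk the learned transitions to build a new melody of up to `length` notes."""
    rng = random.Random(seed)
    melody = [start]
    while len(melody) < length:
        options = transitions.get(melody[-1])
        if not options:  # this note never had a successor in the training data
            break
        melody.append(rng.choice(options))
    return melody


# Hypothetical training data: three tiny melodies written as note names.
training_melodies = [
    ["C", "D", "E", "C"],
    ["E", "F", "G"],
    ["C", "E", "G", "E", "C"],
]

transitions = learn_transitions(training_melodies)
new_melody = generate(transitions, start="C", length=8, seed=1)
print(new_melody)
```

Every step in the generated melody is a note-to-note move that already appeared somewhere in the training melodies. The output is new as a whole, but it is stitched together entirely from patterns the humans supplied.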

Let’s look at some examples.

AIVA Technologies: AI music composition

AIVA Technologies, based in Luxembourg, created an AI that composes music for movies, commercials, games and TV shows.

According to their website, AIVA’s mission is to empower individuals by creating personalized soundtracks with AI. Now, if you are an LA-based professional musician who produces music for movies, this new technology may not sound all that empowering. It sounds more like a threat.

It can take a composer weeks to write a composition for a client. If the Hollywood execs don’t dig your product, they’ll send you back to the studio. Come back later that month with a new composition based on the feedback.

With AI, they can simply hit re-enter and get an entirely new song. That brings me to our next company.

OpenAI’s Jukebox

OpenAI’s Jukebox allows users to generate genre-specific music. Essentially, you can look up an artist, say Michael Jackson, and select a genre, say blues rock, and theoretically, it would fuse Michael Jackson’s voice with a Mississippi Delta-inspired instrumental. Beat it Blues?

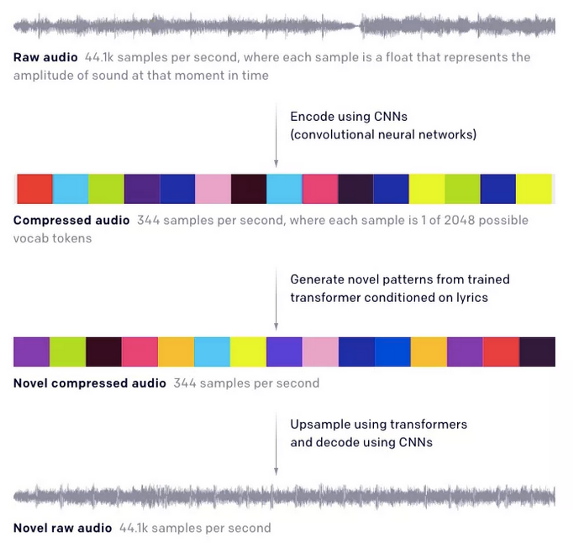

The technology encodes raw music, let’s say an .mp3 or .wav version of Bohemian Rhapsody, using convolutional neural networks (otherwise known as CNNs; no, not that network).

Neural networks for new music

CNNs are a specific type of neural network that work well with visual imagery.

In this case, the software uses audio rather than visual data, but a CNN is still a good fit. Once the raw audio is encoded, the audio is compressed and processed by the AI, over and over again with each new input.

Lastly, Jukebox pushes out novel compressed audio based on the raw audio files and its recognized patterns.

This process allows users to cross-manipulate genres, artists, keys, etc.
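For the curious, here is a rough sketch of the "encode and compress" step only. It is not Jukebox’s actual model: the sine wave stands in for a real recording, the averaging kernel is a hypothetical fixed filter (a real CNN learns its kernel weights from data), and the name `strided_conv1d` is my own. It only shows how stacking strided convolutions shrinks raw audio into a much shorter sequence.

```python
import math


def strided_conv1d(signal, kernel, stride):
    """Slide `kernel` across `signal`, jumping `stride` samples between outputs."""
    width = len(kernel)
    return [
        sum(signal[start + i] * kernel[i] for i in range(width))
        for start in range(0, len(signal) - width + 1, stride)
    ]


# Stand-in for raw audio: one second of a 440 Hz tone sampled at 8 kHz.
sample_rate = 8000
waveform = [math.sin(2 * math.pi * 440 * n / sample_rate) for n in range(sample_rate)]

# Hypothetical fixed averaging kernel; a trained network would learn these weights.
kernel = [0.25, 0.25, 0.25, 0.25]

# Three stacked layers, each shortening the sequence by a factor of four.
encoded = waveform
for _ in range(3):
    encoded = strided_conv1d(encoded, kernel, stride=4)

print(len(waveform), "samples compressed to", len(encoded), "values")
```

Each layer throws away detail in exchange for a shorter sequence: here 8,000 samples shrink to 125 values. That trade-off between compactness and detail is the same kind of compression the warbled output gets blamed on.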

Inevitably, the output will one day sound crystal clear just like any other new single you’d bump on Apple Music or Spotify. But for now, it’s pretty warbled because the audio was compressed so many times. Regardless, it’s a fun tool to use and I recommend checking it out.

You can play around with Jukebox here.

Virtual Pop Stars: Hatsune Miku

If you are well-versed in Japanese pop culture, then you probably know about Hatsune Miku. Hatsune Miku, code-named CV01, is an AI anime pop star licensed out to companies around the world (like Domino’s) for commercial purposes.

A company called Crypton Future Media developed and distributed Hatsune Miku. They used a voice synthesizing software called VOCALOID.

Hatsune Miku debuted way back in 2007, and ‘she’ remains widely popular. The pretend-pop-star poses serious questions about the future of music celebrities, specifically in over-commercialized genres like pop.

Hate it or love it, most pop stars in 2020 are propped up for entertainment value more so than raw musical talent.

From a commercial perspective, why not build an AI pop star? You can have 100% control of their productivity, PR, and emotional disposition, without the antics (albeit understandable ones) associated with Cyrus or Bieber.

Creeped out yet? I got one more for ya.

Holograms + Voice synthesis = new album tours for dead musicians?

Right before COVID shook the world, Whitney Houston’s estate permitted a hologram tour for the late pop icon.

The hologram looked super legit, and her virtual body sang and danced like it was 1987. Check out this video of her on stage in the UK with two dancers:

OK, now there is this other technology that uses speech synthesis based on audio inputs (just like music) to mimic someone’s voice. And pretty much any Average Joe with enough time on their hands and a laptop can do it.

So, if we input voice data from The Notorious B.I.G. and Tupac, we can make a new song using their voices.

You just give the machine some lyrics to read off from, and we could make Jay-Z rap the Bible. Or, we could combine our favorite White Stripes lyrics with legendary rappers, like Vocal Synthesis did below:

The tech moves too fast

Copyright law hasn’t caught up to this new technology.

Back in April, YouTube removed a Jay-Z video from Vocal Synthesis after a copyright infringement claim from Roc Nation LLC. But Vocal Synthesis struck back, arguing that it wasn’t a copyright violation at all.

Shockingly, YouTube reversed the decision and let the video stay up. Go ahead and make Jay-Z’s deep-fake voice say whatever you want. For now, there is nothing he can do about it.

Let’s use our imagination here.

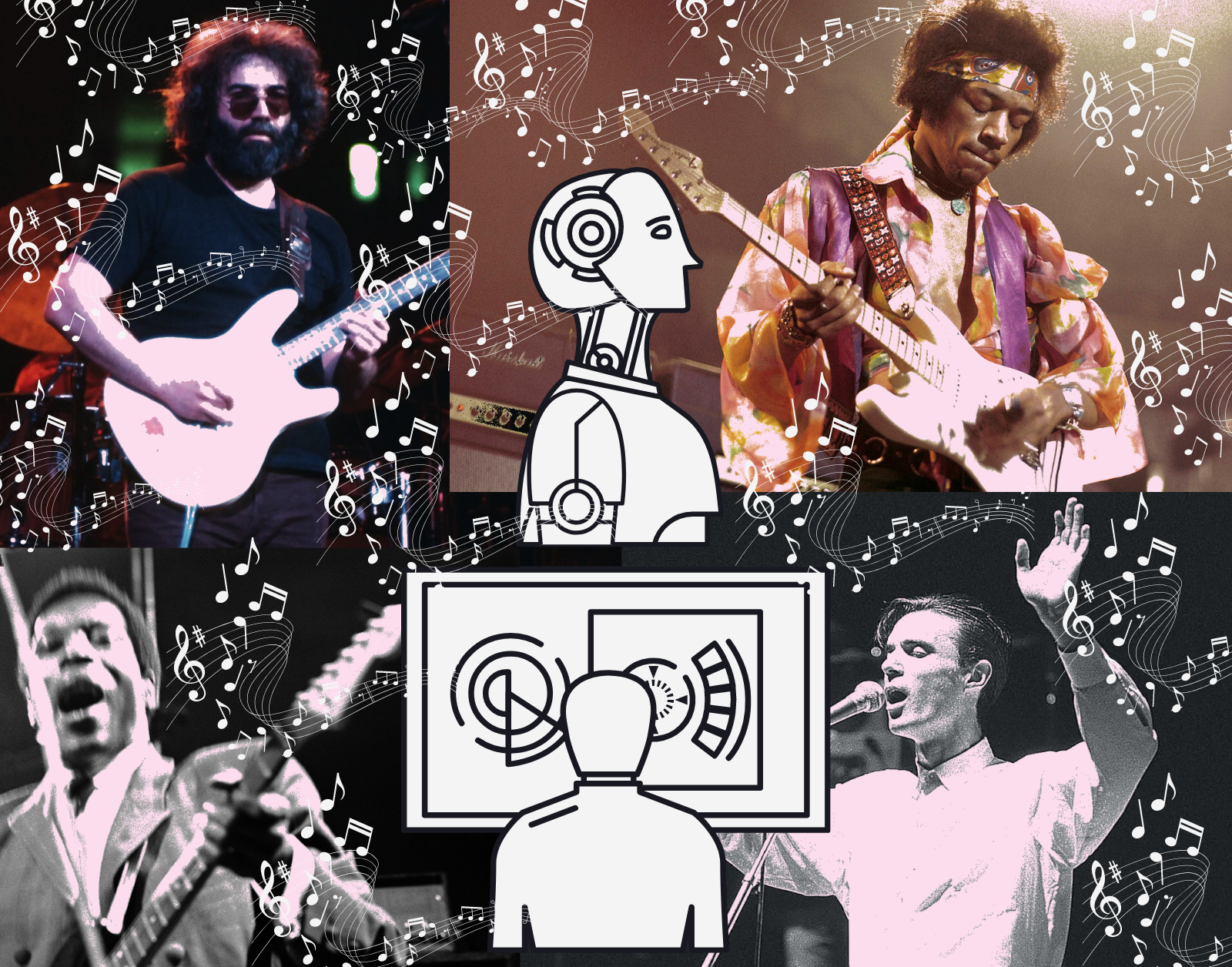

Using holograms, voice synthesis, and AI music composition tools, we can drop a new Beatles album, tour that new album, put up holograms that look like the original young stars, and it would be damn hard for the biggest Beatles fan to tell the difference from an old-man $250 Rolling Stones concert.

This is where folks start suggesting that robots will take over music and us measly human musicians are doomed forever. I’m here to tell you why that won’t happen.

AI mimics, humans imagine

At least for the foreseeable future, AI is incapable of creating music without mimicking an existing data set that originated from human innovation.

At some point, you may be able to input tens of thousands of hours’ worth of music and get something entirely original. In other words, Artificial Intelligence would create something entirely groundbreaking in the arts, independent of human creativity.

But as the technology exists today, AI in music is limited by the genres, keys and time signatures that define its rules. Sure, Jukebox AI can bust out a Luke Bryan hit single in 30 seconds, but that hit single is based on the previous works of Luke Bryan. Artists don’t produce new music like that.

Finding inspiration in experiences

Consider the Beatles after India, the Grateful Dead after Egypt, or even the dark influence of heroin over John Coltrane. Those human experiences instigated new definitions and new perspectives for their music. Humans — musicians — artists are not linear thinkers. We derive inspiration from current and past events, abstract ideas, and unexplainable moments where words of poetry and musical phrases scream at our minds.

You can’t input “traditional Indian folk music” and “Revolver” (the album that preceded the Beatles’ trip to India) and expect a “Within You Without You” Beatles masterpiece.

Pink Floyd guitarist David Gilmour co-wrote “Shine on You Crazy Diamond” after his former best friend Syd Barrett lost his mind to LSD and schizophrenia in the 1960s. Bob Dylan wrote his brilliant “Masters of War” in response to the Vietnam War, and “Blowin’ in the Wind” in response to the civil rights movement, with the powerful line, “how many roads must a man walk down, before you call him a man?” The list goes on and on.

In contrast, new music produced with AI would use “Blowin’ in the Wind” as its source inspiration, rather than using a life experience to spark new ideas.

Those experiences could be something as deep as losing a parent, or as simple as walking past a cute girl. Those are the moments that manifest history’s greatest ballads.

Improvisation sweats and breathes

No one is looking to pay money, as far as I know, to watch a live 26-minute robot rendition of Scarlet Begonias / Fire on the Mountain. But 43 years later, 3.5 million people still want to listen to this one.

There is something magical and timeless about improvisation in music. Even when mistakes are made, it somehow amplifies the fan-musician relationship into a deeper, more intimate understanding.

Jerry Garcia was well-known for forgetting lyrics, cracking his voice or missing notes.

Some of the Dead’s most famous shows came from when Garcia was too sick to sing, so he had to jam extra well to make up for the lost voice. Those moments are inherently human. Computer-made music could never be as authentic and clumsy as its flawed human counterpart.

Now that I think of it, just last week my guitarist broke a string during an outdoor gig. We laughed and the crowd cheered. Isn’t that the beauty of it all?

A deeper meaning for music

Creativity stems from inspiration; it is not programmed or confined by the boundaries of linear thinking.

Live musical performances, for example, are shaped by the moment in which the music is produced. The creative expressions put forth by a collection of human-manipulated instruments depend on the bad food we ate that morning, the nasty fight we got in the night before, the painful sunburn we got that afternoon, the family tragedy we faced that year.

All of those loves, losses, break-evens and wins are scrambled together into one medley outburst of raw emotional creativity. No code could match the unpredictable interface between an artist’s heart, mind and soul.

Let’s look at jazz music: the archetype of human improvisation, a genre entirely based on impromptu creativity. John Coltrane, the legendary saxophonist who battled with drug addiction his entire life, performed “My Favorite Things” differently each time.

But any musician who has performed in a band understands that the varying directions of his saxophone solos were based on the drummer’s fills, the bassist’s grooves, or the pianist’s chosen melodies.

It all sort of happens in one simultaneous telepathic “this is how we feel right now” that constantly changes with each player’s tap, hit, blow or pluck.

Using AI to amplify music

Similar to the way AIVA pitched their product, Artificial Intelligence can be used to help the artist speed up and maximize the composition process.

If I want to write a folk-electronic song (think Mumford & Sons + Avicii), I could plug in what that may sound like, and the machine would give me a great foundation and source of inspiration that didn’t exist before this technology. Apply that same philosophy to writing lyrics.

In a commercial sense, business execs can rely on a machine to generate a catchy jingle. Yet, do decision-makers know what jingles work best for their business?

A professional musician can take an AI-generated song and take it a step further, adding that much-needed hot sauce to an otherwise dry burger.

There will always be a place for bipedal fleshbags in the arts. With or without AI in music. Why? Because the consumers of creation are also fleshbags, and we want to be wowed and wooed by the hairy, smelly creatures that feel and squeal just like we do.
